On the Vulnerability of Concept Erasure in Diffusion Models

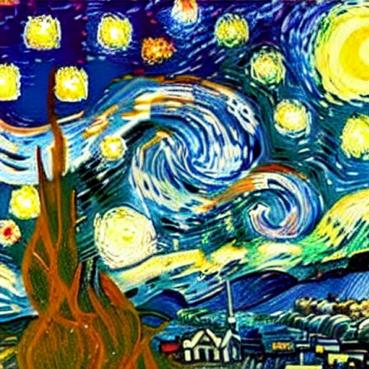

In this ongoing work, we have developed an adversarial attack algorithm that reliably recalls erased concepts from diffusion models that have been “unlearned” via a wide range of techniques.

For the work in progress, see our arXiv preprint.